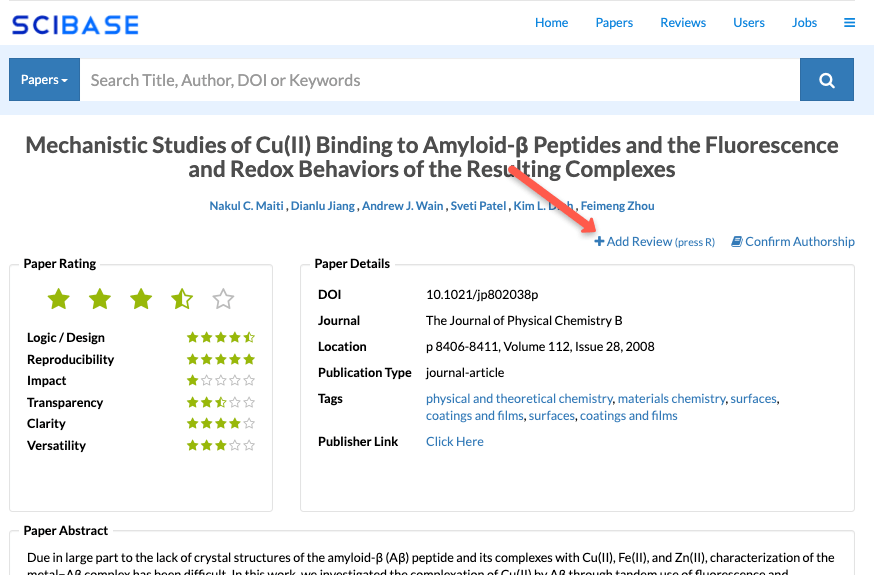

Add a Review

- To add a review, simply search for your paper.

- DOI is best but the full title also usually works.

- When you find it, click on the Add a Review link as shown below.

In 2012, Amgen researchers published a paper in Nature that discussed the high rate of failure the scientific community had had in translating their understanding of cancer into effective clinical treatments. Despite the tens of thousands of researcher hours and the hundreds of millions of dollars spent on developing them, the success rate of clinical interventions had proven remarkably low.

In an attempt to identify the cause of these challenges, these researchers then took a simple but audacious step: they decided that they would try to reproduce, for themselves, many of the findings in basic cancer research. After careful consideration, they chose 53 papers - published in some of the most prestigious scientific journals - that were recognized as "foundational" in the field. The team spent countless hours and millions of dollars to conduct this study - and to share its findings with the world - in the hopes that it would further humanity's efforts in combating one of the most pernicious diseases of the 21st century.

And so, when the last of the reproduction attempts had been completed, the data analyzed and debated, and the results finalized, what were the results? Which of the findings had stood up to scrutiny and which had failed to be reconfirmed? In the end, out of 53 papers that had guided hundreds of millions of dollars of funding and countless hours of researcher effort, how many papers were they able to successfully reproduce?

Six.

Just six.

The scientific method - exploration, hypothesis, controlled experimentation, and sharing of the results - has been, quite literally, the most powerful methodological advance in human history and is at the root of virtually all the technology that dominates modern life. The culture of modern scientific communication, however - publish-or-perish incentives in academia, scientific publication being dominated by a few large publishers who decide what gets published and what doesn't, and a general lack of transparency and accountability to the larger scientific community for political or commercial reasons - can lead to tremendous misallocation of money, talent, and public attention, and can act as a significant impediment to scientific progress.

Changes in both cultural norms and information technology are beginning to improve matters; open-access journals and scientific social networks are steps in the right direction, as are earlier attempts - whether journals or web sites - to address the issue of scientific reproducibility. However, we believe they have not yet succeeded in addressing systemic issues on a large scale for a few reasons:

It is with the above in mind that we're trying to start our own platform for addressing these issues. We call it SciBase. SciBase is meant to be a platform for crowdsourced reviews of scientific papers; what Yelp is to restaurants or Amazon reviews are for products, we want to be for scientific work.

The basic premise is pretty simple: scientists provide their reviews of papers they themselves have tried to reproduce. In turn, other scientists rate that scientist's papers and reviews, and the best-rated papers, reviews, and scientists rise to the top. For researchers contributing content, this makes it easier to get recognized for work that might otherwise get little attention (if published in a less widely read journal) or none at all (most journals won't publish a reproduction attempt by itself). And - as the database gets built - it can help many scientists home in on the best papers or find new papers they might have missed.

You could say that we started SciBase as an experiment. Our hypothesis? That if we could create a platform that facilitated transparency and accountability in science by providing better data while also addressing each of the points above, we could succeed in creating a systemic change in the way science is conducted and communicated. As with any experiment, SciBase could fail. This is a tricky problem that (we believe) no one has gotten right just yet, and - though we believe someone will get it right at some point - it may not be us.

With that said, what steps are we taking to address what we believe were a few causes of failure previously?

Regarding critical mass, we're starting by focusing only on the general areas of chemistry, materials, molecular biology, and biotechnology and devoting all of our resources to getting as much traction as we can in those areas. We'd rather have 50 reviews all in the area of amine synthetic chemistry than have 25 in psychology, 25 in sociology, 25 in economics, and 25 in physics.

Regarding incentives, we're putting a lot of effort into trying to make contributing to the platform worthwhile. Contribute high-quality content and we'll make sure that your work gets ranked highly on Google and other search engines, that other scientists are aware of your work, and that you'll be first in line for job openings that employers post on our site. Heck, we'll even try to help get your work published in traditional journals, and we have a few other ideas that we're still brainstorming. If you're an employer or hiring manager, we'll help do your recruiting for you, with much higher quality and often at lower rates than industry norms.

Regarding political realities, we're working hard to balance privacy and accountability. For example, users can post anonymously or under an alias, but the quality of their contributions stays tied to their public-facing profile, and we provide enough information about a contributor to help other users decide how much weight to give that person's review. We also have ways to identify and discourage users who are indiscriminately critical (say, of a 'rival') or indiscriminately complimentary (say, of a friend).

Regarding balancing new and old, while we'll soon provide the ability for scientists to publish completely new work, we're actually first focused on allowing scientists to find and review previously published work, since that's still where the vast majority of scientific knowledge resides.

Finally, our commitment to you is that if you're a scientist and willing to share your knowledge with the world by contributing high-quality content to the site yourself, SciBase will remain absolutely 100% free. Forever.

If you read everything above, you probably have one of a few reactions. Maybe you're skeptical. Maybe you're excited. The only thing we know for sure is that - if you've read this far - you're at least interested. And we thank you for that, because we need as many people as possible interested in this topic. But we need more than people interested; we need them engaged. As mentioned earlier, the key to making this work is quickly getting to a critical mass of users and reviews. If we do, this will work. If not, it won't.

To do this, we 100% absolutely need your help. So we're asking: please help us if you can. Here's how:

We feel strongly that improving the transparency and accountability of science can have significant benefits for both science and society. SciBase is our experiment in trying to find a solution.

We don't know if it's going to work, but we think it's worth trying.

Please help if you can.

Warm Regards,

The SciBase Team
